Cloud computing offers many benefits: scalability, increased collaboration, better cost control, security, business continuity, and accessibility. You can work from anywhere, which is very important in the era of remote work. One of our clients wanted to harness all of these benefits and asked us to help them plan a cloud computing infrastructure.

To give you a broader context, planning the cloud infrastructure was part of a larger project. Our client wanted to build a web app automating business processes at the company. They wanted to deploy it in the cloud, so they needed to build cloud infrastructure. Since they had no experience in this area, they asked us for help. The overall project consisted of the following parts:

- developing a web app,

- planning cloud infrastructure for deployment,

- advising the client on how to manage cloud resources effectively,

- setting up the software development process of cloud apps.

Public cloud vs private cloud vs hybrid cloud

Let’s make one thing clear right from the beginning. When talking about cloud infrastructure, we mean the public cloud, i.e., cloud computing services offered by a third-party provider over the public Internet and available to anyone who is willing to use or purchase them.

The public cloud is a multi-tenant environment, i.e., the same computing resources (e.g., storage, processing power, databases) are shared among many clients. Cloud infrastructure is owned and maintained by a specialized third-party cloud provider that offers it through a subscription or pay-per-use model. This means that virtually anyone can use the cloud computing resources offered by a public cloud provider, similar to buying utility services such as electric power or mains water.

A private cloud, on the other hand, offers computing services over the Internet or a private internal network that are available only to selected users and not to the general public. This means that software and hardware resources are exclusively available to a single client.

A private cloud, sometimes also called an internal or corporate cloud, has the same benefits as a public cloud (scalability, increased collaboration, and accessibility), but also gives its single tenant more direct control over access, security, and resource customization than a shared public cloud usually does.

There is also a third option called a hybrid cloud, which is a single cloud environment that integrates public and private cloud infrastructures. The hybrid cloud model is widespread among enterprises, as it lets them choose the optimal cloud environment for each application or workload. For example, an enterprise can store sensitive data in its on-premises data center while using a public cloud to access additional computing resources at peak times.

The major public cloud providers are Amazon Web Services (AWS), Microsoft Azure, Google Cloud, and Alibaba Cloud. Amazon, Google, and Microsoft also provide private cloud services. Other key players in the private cloud market are IBM (which also offers a public cloud), Dell, VMware, Cisco, Hewlett Packard Enterprise (HPE), SAP, and OpenStack.

Now that we have explained the basic notions, we can move on to planning the cloud infrastructure for our web app development.

Components of cloud infrastructure

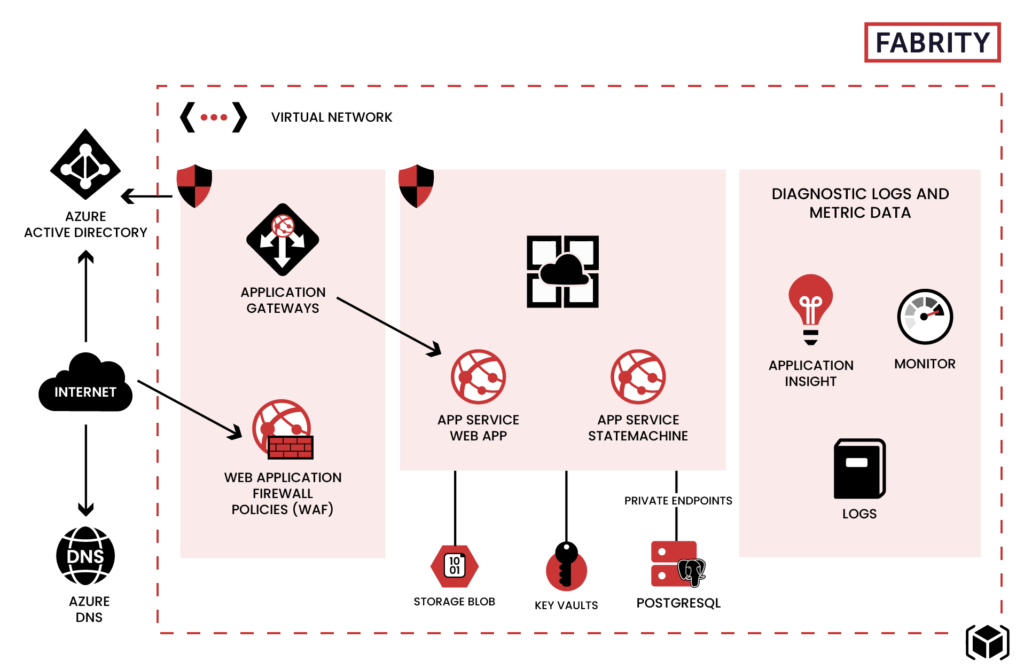

We chose Microsoft Azure as the cloud platform on which to build and deploy our web app (and all other web apps in the future). The main system component was Azure App Service, which would host the main web application and other services, e.g., a state machine responsible for a process automation feature.

The database was based on Azure Database for PostgreSQL. This allowed us to use the collected data both in Power BI reports and in the Azure Synapse Analytics data warehouse.

We separated the testing and production environments by placing them in different resource groups. This made it much easier to manage permissions and the services included in each environment at the infrastructure level.

To ensure system security, we proposed dividing virtual networks into zones and using API Gateway, i.e., one central place where you can put authentication, monitoring, and other mechanisms for specific web services. Using API Gateway enhances security: since not all of our services are available for direct communication, attackers have fewer opportunities to exploit potential security vulnerabilities.

API Gateway works in a very simple way. Once it receives a frontend request, it splits the request, if needed, into several requests and sends them to the appropriate addresses. In this way, we were able to better manage the incoming traffic in our system and enhance its security, as the majority of such tools can handle common attack methods.
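
The request flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the configuration used in the project: the `Gateway` class, the two backend functions, the `/dashboard` route, and the token table are all hypothetical, and the backends are plain functions rather than real network services.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


# Hypothetical internal services; in a real system these would be
# separate web services that are not reachable from the outside.
def orders_service(user: str) -> dict:
    return {"orders": [f"order-1-for-{user}"]}


def profile_service(user: str) -> dict:
    return {"profile": {"name": user}}


@dataclass
class Gateway:
    """Single entry point: authenticates, then fans a request out."""

    routes: Dict[str, List[Callable[[str], dict]]]
    valid_tokens: Dict[str, str]  # token -> user name (assumed auth scheme)

    def handle(self, path: str, token: str) -> dict:
        # Authentication happens once, in one central place.
        user = self.valid_tokens.get(token)
        if user is None:
            return {"status": 401, "body": {}}
        handlers = self.routes.get(path)
        if handlers is None:
            return {"status": 404, "body": {}}
        # Split one frontend request into several backend calls
        # and merge the partial responses.
        body: dict = {}
        for handler in handlers:
            body.update(handler(user))
        return {"status": 200, "body": body}


gateway = Gateway(
    routes={"/dashboard": [orders_service, profile_service]},
    valid_tokens={"secret-token": "alice"},
)
response = gateway.handle("/dashboard", "secret-token")
```

Because the frontend only ever talks to `gateway.handle`, the backend functions never see unauthenticated traffic, which is the property the gateway is meant to provide.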

To access the system, a user needs to log in using Azure Active Directory.

App monitoring was based on Application Insights, which gives detailed insight into how the app works. The planned cloud architecture is shown in Fig. 1 below:

Fig. 1. Planned MS Azure cloud architecture

Managing the MS Azure environment

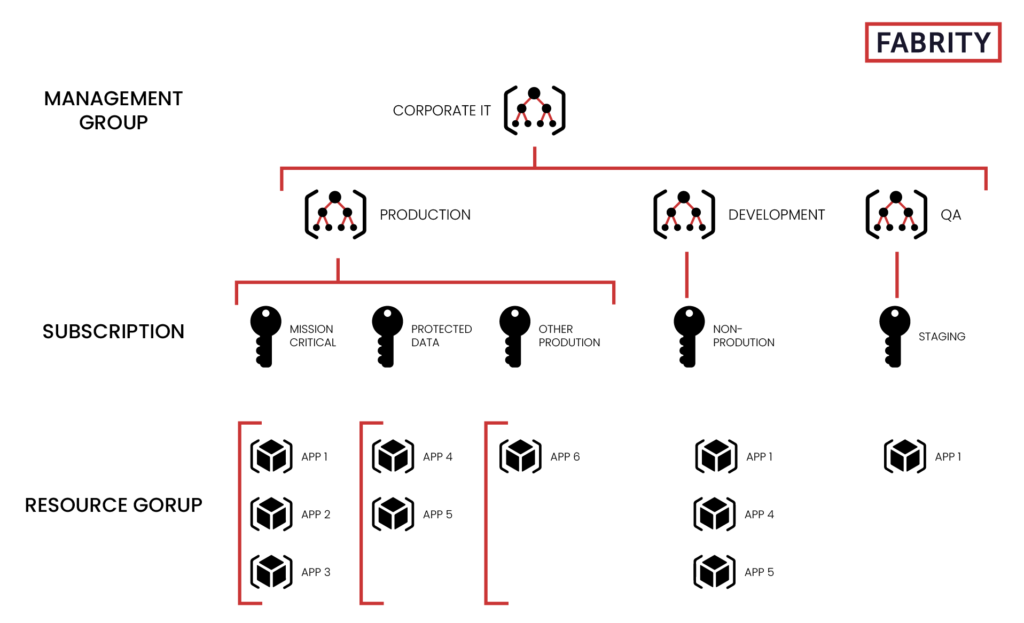

One of the main points to cover when building the cloud infrastructure was to define how subscriptions would be managed. We wanted to be able to logically separate different levels of permissions management, as well as the environments of different systems.

The best practice is to separate testing and production environments by creating separate groups, as shown below in Fig. 2. Such a configuration allowed the client to better manage user permissions and environment security.

Fig. 2. Managing subscriptions in the MS Azure environment

In this scenario, the slot mechanism in Azure App Service can be used to switch to a new version without interrupting the operation of the application. Should any problems occur with the deployment of the new version, this mechanism also keeps the last working version of the app available, so you can switch back to it.
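
To make the idea concrete, here is a minimal Python model of swapping two slots. It is a conceptual sketch only and does not call any Azure API: the `SlotPair` class, the `release` function, and the health check are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class SlotPair:
    production: str  # version currently served to users
    staging: str     # version prepared for release

    def swap(self) -> None:
        # Swapping exchanges the two versions, so the previous
        # production version stays available in the staging slot.
        self.production, self.staging = self.staging, self.production


def release(slots: SlotPair, is_healthy: Callable[[str], bool]) -> bool:
    """Swap the new version in; swap back if the health check fails."""
    slots.swap()
    if is_healthy(slots.production):
        return True
    slots.swap()  # roll back to the last working version
    return False


good = SlotPair(production="v1", staging="v2")
good_ok = release(good, lambda version: True)

bad = SlotPair(production="v1", staging="v2-broken")
bad_ok = release(bad, lambda version: "broken" not in version)
```

In the first case `v2` ends up in production and `v1` remains in staging; in the second case the failed health check triggers a second swap and `v1` keeps serving users.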

When you start working in the Azure environment, it is good practice to define global policies on how to create resources. We recommend adding policies that enforce at least the location of created resources and a set of required tags. In this way, you can restrict resource creation to a single region (e.g., West Europe), which is a common practice. Next, it is necessary to define the set of tags. We recommend sticking to the minimum proposed by Microsoft.
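
The effect of such policies can be illustrated with a short Python check. Note that this is not how Azure Policy itself is written (policy definitions there are JSON documents); it is only a sketch of the rule being enforced. The `policy_violations` function and the tag names `environment`, `owner`, and `project` are assumptions made for this example, and the allowed region is taken from the example above.

```python
from typing import Dict, List

ALLOWED_LOCATIONS = {"westeurope"}  # single permitted region (assumed)
REQUIRED_TAGS = {"environment", "owner", "project"}  # hypothetical tag set


def policy_violations(location: str, tags: Dict[str, str]) -> List[str]:
    """Return a list of reasons why a resource would be rejected."""
    violations = []
    if location.lower() not in ALLOWED_LOCATIONS:
        violations.append(f"location '{location}' is not allowed")
    # Compare tag names case-insensitively and report missing ones
    # in alphabetical order so the output is stable.
    present = {name.lower() for name in tags}
    for tag in sorted(REQUIRED_TAGS - present):
        violations.append(f"missing required tag '{tag}'")
    return violations


compliant = policy_violations(
    "westeurope", {"environment": "test", "owner": "ops", "project": "bpa"}
)
rejected = policy_violations("eastus", {"owner": "ops"})
```

An empty list means the resource passes; otherwise each entry names one rule that was broken, which makes the reason for a rejection easy to report back to the person creating the resource.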

Finally, a naming convention needs to be defined for the persons responsible for resource creation. We recommend following the approach proposed by Microsoft.

A clearly defined naming convention ensures naming consistency across the entire environment and brings three benefits. First, you will be able to determine the type of resource or environment solely from its name. Second, it will be easier to create resources at the code level (using an infrastructure-as-code approach). Third, you will be able to quickly identify resources related to a specific project if you add its identifier to the resource name.
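
As an illustration, a naming convention can be captured in code so that names are both generated and validated in one place. The pattern below (`<type>-<project>-<environment>-<instance>`) is a hypothetical convention chosen for this sketch, not Microsoft's exact recommended scheme, and the helper names `build_name` and `parse_name` are likewise assumptions.

```python
import re
from typing import Dict

# Hypothetical convention: <type>-<project>-<environment>-<instance>,
# e.g. "app-bpa-prod-001".
NAME_PATTERN = re.compile(
    r"^(?P<type>[a-z]+)-(?P<project>[a-z0-9]+)"
    r"-(?P<env>test|prod)-(?P<instance>\d{3})$"
)


def build_name(resource_type: str, project: str, env: str, instance: int) -> str:
    """Compose a resource name and reject anything off-convention."""
    name = f"{resource_type}-{project}-{env}-{instance:03d}"
    if NAME_PATTERN.match(name) is None:
        raise ValueError(f"name does not follow the convention: {name}")
    return name


def parse_name(name: str) -> Dict[str, str]:
    """Recover resource type, project, environment, and instance."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"name does not follow the convention: {name}")
    return match.groupdict()


web_app_name = build_name("app", "bpa", "prod", 1)
parsed = parse_name("rg-bpa-test-002")
```

With a helper like this, infrastructure-as-code scripts can generate names instead of hard-coding them, and the same pattern can be used to find all resources that belong to one project or environment.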

Code repository and CI/CD process

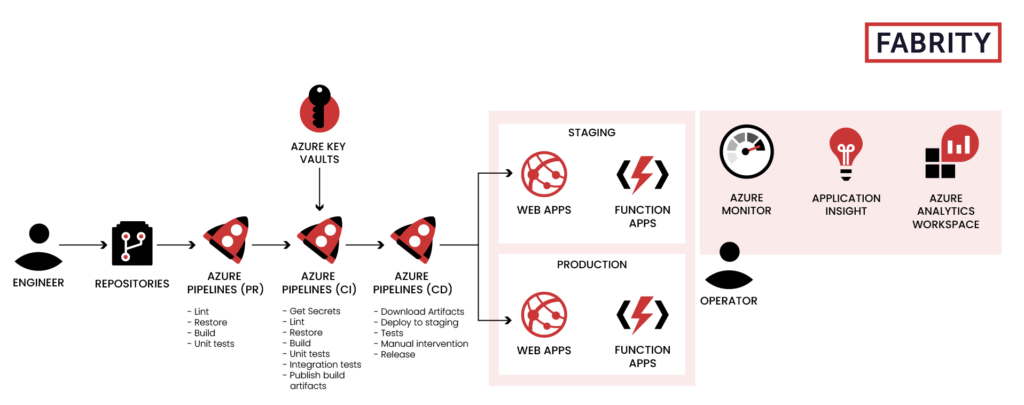

The next challenge was deciding where to keep the code and how to manage projects and deployments. Our client could not decide which platform to choose for hosting the code: Azure DevOps or GitHub. The majority of the client’s code repositories were already on GitHub. On the other hand, GitHub Actions, the GitHub tool for creating CI/CD pipelines, at that time offered only limited possibilities for using the MS Azure functionalities. Therefore, we decided to combine these two environments: GitHub and MS Azure.

The code and ARM templates responsible for launching specific Azure services were kept on GitHub. Additionally, we integrated GitHub with Azure Boards and Azure Pipelines. In this way, we were able to manage project tasks directly in Azure DevOps. In the same place, we were also able to monitor the processes of building and deploying the app.

When it comes to designing a CI/CD pipeline, we proposed a classical approach to working with MS Azure, as shown in Fig. 3:

Fig. 3. Designing a CI/CD pipeline using Azure DevOps (Source)

Using MS Azure cloud services for web app hosting

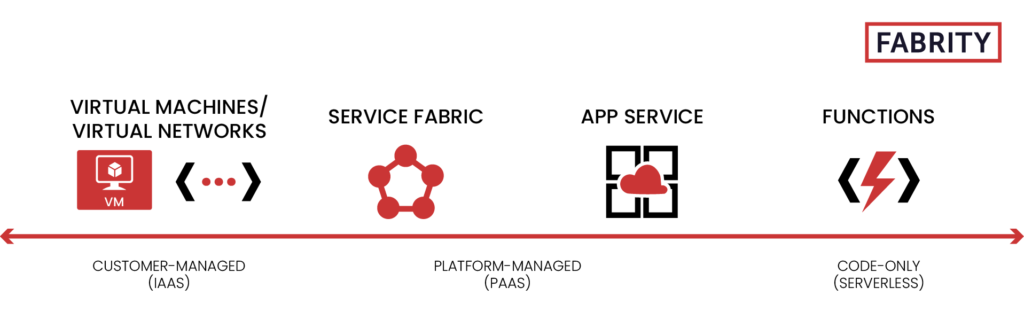

The process of cloud-based web app development is pretty much the same as in the case of on-premises development. We need to have a place to deploy and launch our app so that users can access it. In Microsoft Azure, we have several services that can help us with these tasks. See Fig. 4:

Fig. 4. Microsoft Azure services

We can launch our web app on a virtual machine and set it up according to our preferences, as in on-premises development. With this approach, you need to manage the virtual machine, its updates, and the entire system by yourself.

When building a web app, our main concern is very often limited to where it is launched. In this scenario, Azure App Service has an important advantage: it provides a platform on which to deploy and launch our app and takes the ongoing operation of the system off our shoulders. At the same time, it makes it easier to scale the app up and down depending on the current workload.

Additionally, there is Azure Functions, which works well when some parts of the system need to be more independent, e.g., notification mechanisms, schedulers, or tasks related to the processing of large amounts of data.

Planning cloud infrastructure and development in Microsoft Azure—summary

The project was completed successfully.

We built a web app automating business processes at the client’s company, deployed it in the cloud using cloud services offered by Microsoft Azure, implemented automation through a CI/CD process, as well as provided advice on how to manage cloud resources effectively.

What is also important is that we came to several conclusions you should bear in mind when planning cloud infrastructure and development.

First, you need to prepare the cloud environment well by defining and enforcing policies that are compatible with your business model. In this way, you will be able to better control resource creation and usage, and thus control cloud spending. Cloud resources that are created without control, used for some time, and then forgotten can become a substantial financial burden, and well-enforced policies help you avoid this.

Second, choose tools and deployment scenarios according to the client’s needs and current infrastructure. Microsoft Azure offers many services that can be adjusted to particular needs. Therefore, it is good to take existing systems into account so that environments and services can be integrated.

Third, automate app building and deployment by implementing a CI/CD process, as it offers considerable benefits. Bugs are detected earlier, and thus the final code quality is higher. Manual labor is reduced, and with it the number of human errors. You can also ship new functionalities to your clients faster.

Last but not least, when choosing cloud services, you need to find the right balance between your actual business needs, costs, and additional work. For example, managing virtual machines can generate costs and labor that can be easily avoided if you choose Azure App Service.