One of the key aspects of digital transformation is cloud migration and modernization. To gain business agility, more and more companies are migrating their on-prem applications to the cloud. We have therefore prepared this short guide on how to migrate a web app to the Azure cloud.

Our guide is based on a true story, i.e., a cloud migration and modernization project we did for one of our clients in the pharmaceutical industry. They wanted to start using the Azure cloud. This change would mainly apply to newly created apps, but they also asked us to prepare a migration scenario for existing on-prem web apps. Since all future software development projects were to be carried out in the cloud, the migration scenario was expected to include best practices covering the full software development life cycle.

To prepare an Azure migration plan, we chose a web app handling business processes at the company. We picked this particular app because it was relatively small, yet complex enough to test different migration scenarios. Additionally, our client wanted to cut costs by replacing a costly license for a third-party database with an open-source solution.

Analyzing the environment and planning the migration

The first step was to audit the client’s environment and system architecture. We asked them to share the source code and introduce us to the existing infrastructure. The app to be migrated was a typical .NET web app using Windows authentication and a third-party database. Once we had gathered all the required information, we prepared an Azure migration plan that consisted of the following steps:

- Prepare a solution architecture in MS Azure cloud and technical assumptions.

- Modify the mechanisms that required changes in the app code, i.e., authentication, data access layer, and logging.

- Help the client to define the way cloud resources should be managed.

- Prepare MS Azure infrastructure and automated deployment processes using Azure DevOps.

- Deploy the app in the testing environment and confirm that project requirements were met.

- Perform fixes and system optimization.

- Deploy the app into production.

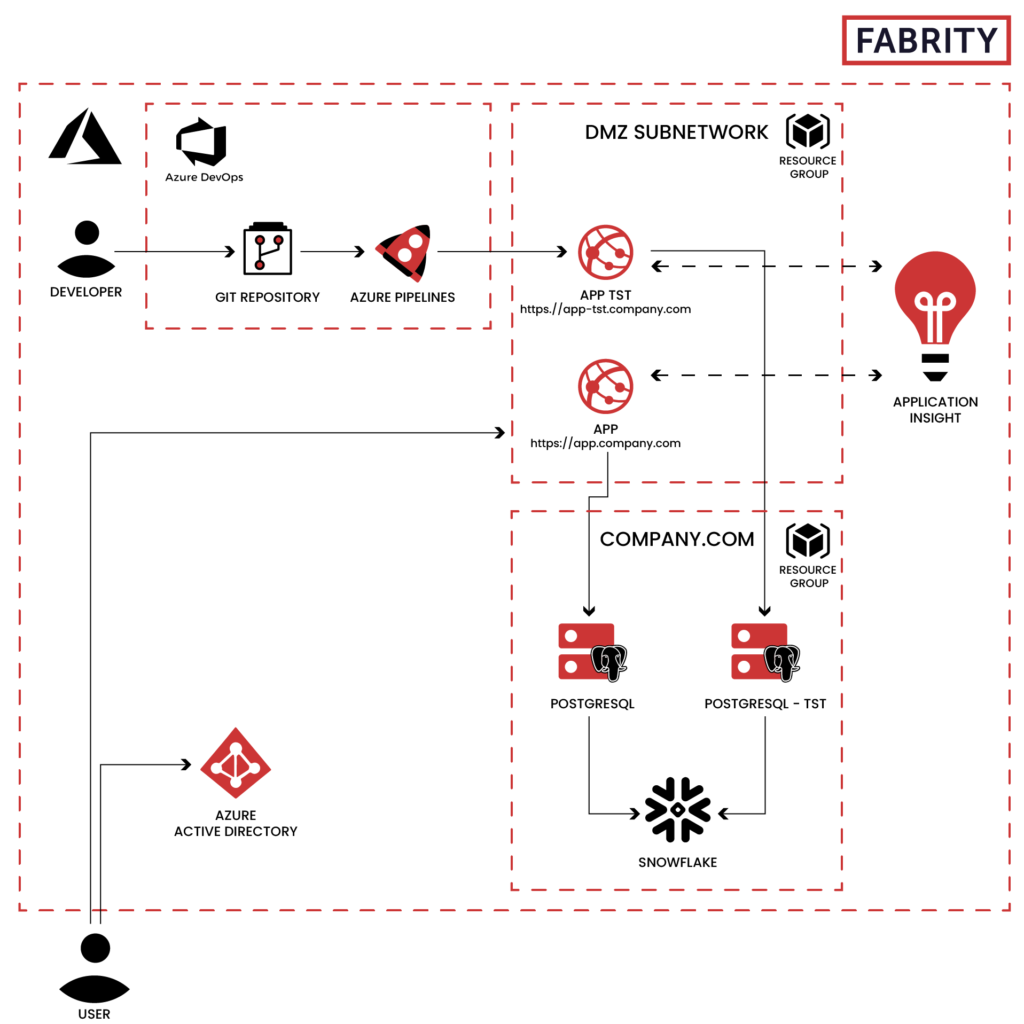

Fig. 1 Azure cloud architecture—a migration plan

Azure cloud migration—a database migration

Once the plan was accepted by the client, we started work.

The biggest challenge we needed to overcome was the database. Our client was considering migrating to Azure SQL Database, but finally decided to use Azure Database for PostgreSQL, as it allowed them to reduce the solution's maintenance costs. The only problem with the latter was the lack of a built-in pipeline step for migrating a database schema during deployment. Such a step was needed in the code-first migration approach that we initially wanted to apply.

In the code-first approach, we first write the classes that define the content of entities and the relations between them. Next, based on these classes, we create a migration that builds the database. Each change to the entities makes it necessary to generate a new migration that updates the database to match the model defined in the code.
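To make the idea of a migration more concrete, here is a minimal sketch in Python. It is not the Entity Framework tooling used in the project. The `Order` entity, the `SQL_TYPES` mapping, and the `generate_migration` helper are hypothetical names introduced only for illustration. The sketch compares an entity class with the columns that already exist in a table and produces the SQL statements a migration would need to run.

```python
from dataclasses import dataclass, fields

# Hypothetical mapping from Python types to PostgreSQL column types.
SQL_TYPES = {int: "integer", str: "text", float: "double precision", bool: "boolean"}


@dataclass
class Order:
    """Example entity; in code-first, classes like this one are the source of truth."""
    id: int
    customer: str
    total: float


def model_columns(entity):
    """Return {column name: SQL type} for every field declared on the entity."""
    return {f.name: SQL_TYPES[f.type] for f in fields(entity)}


def generate_migration(table, entity, existing_columns):
    """Build the SQL statements that bring `table` in line with `entity`.

    `existing_columns` maps the current column names to their SQL types;
    pass an empty dict when the table does not exist yet.
    """
    wanted = model_columns(entity)
    if not existing_columns:
        cols = ", ".join(f"{name} {sql_type}" for name, sql_type in wanted.items())
        return [f"CREATE TABLE {table} ({cols});"]

    statements = []
    for name, sql_type in wanted.items():
        if name not in existing_columns:
            statements.append(f"ALTER TABLE {table} ADD COLUMN {name} {sql_type};")
    for name in existing_columns:
        if name not in wanted:
            statements.append(f"ALTER TABLE {table} DROP COLUMN {name};")
    return statements


if __name__ == "__main__":
    # The table exists but lacks the newly added `total` field.
    for statement in generate_migration("orders", Order, {"id": "integer", "customer": "text"}):
        print(statement)
```

A real migration tool also tracks which migrations have already been applied and handles type changes, keys, and relations. The sketch only shows why every change to an entity results in a new migration.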

Careful analysis, however, revealed that applying a code-first approach would be too time-consuming and would require a lot of changes in the app source code. Therefore, we decided to stick to the database-first approach and use the EDMX model, adding to it a connector that supported a PostgreSQL database.

An EDMX file is an XML file that defines the data model (Entity Data Model) used in the system. It describes the database schema and the relations between its entities. It also defines how objects are mapped between the database and the EDMX model. An EDMX model is used in the model-first and database-first approaches. In these approaches, we first create a data model (designed from scratch or derived from an existing database), and then, based on this model, automatically generate the classes that are later used to interact with the database.
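The step from a model file to generated classes can be sketched as follows. This is an illustration in Python, not the Entity Framework generator. The XML below is a heavily simplified, hypothetical stand-in for an EDMX file, and `generate_classes` and `PYTHON_TYPES` are names made up for this example.

```python
import xml.etree.ElementTree as ET

# Simplified, hypothetical model description. A real EDMX file is much larger,
# uses XML namespaces, and has separate storage, conceptual, and mapping sections.
MODEL_XML = """
<Model>
  <EntityType Name="Customer">
    <Property Name="Id" Type="Int32" />
    <Property Name="Name" Type="String" />
  </EntityType>
  <EntityType Name="Order">
    <Property Name="Id" Type="Int32" />
    <Property Name="Total" Type="Double" />
  </EntityType>
</Model>
"""

# Assumed mapping from model types to Python type names.
PYTHON_TYPES = {"Int32": "int", "String": "str", "Double": "float"}


def generate_classes(model_xml):
    """Return Python source code with one dataclass per EntityType in the model."""
    root = ET.fromstring(model_xml)
    blocks = []
    for entity in root.findall("EntityType"):
        lines = ["@dataclass", f"class {entity.get('Name')}:"]
        for prop in entity.findall("Property"):
            lines.append(f"    {prop.get('Name')}: {PYTHON_TYPES[prop.get('Type')]}")
        blocks.append("\n".join(lines))
    return "\n\n".join(blocks)


if __name__ == "__main__":
    print(generate_classes(MODEL_XML))
```

The point of the sketch is the direction of the workflow: the model file is the source of truth, and the classes are regenerated from it whenever the model changes.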

Connecting the EDMX file to the database did not go smoothly. We found out that the Entity Framework 6 (EF) plugin for Npgsql, an open-source ADO.NET data provider for PostgreSQL, did not support EDMX. Therefore, we needed to use a different plugin. Additionally, the Npgsql plugin did not handle database engine procedures well, so for those we fell back to the simplest approach: connecting through plain ADO.NET.

The second biggest challenge to solve when migrating to Azure was index optimization. The scalable version of Azure PostgreSQL does not offer index suggestions, and PostgreSQL itself requires an additional tool (such as pganalyze) for this.

Furthermore, when migrating the database, we could not migrate all of the indexes. So, after deploying the app in the testing environment, we needed to optimize the data layer. Additionally, the change of database impacted the app logic, as the old database and the new one differed in many ways, e.g., cursors worked in a different way. Still, we managed to solve this.

Last but not least, we needed to scale the app's Azure App Service and optimize its code. We needed more cloud resources, so we had to upgrade the service plan. Additionally, to make the app more responsive, we added multi-threading in some places.

Azure cloud migration—changing the authentication mechanism

Next, to ensure users had access to the app, we needed to switch the authentication mechanism to Azure Active Directory (Azure AD), Microsoft's cloud-based identity and access management service. It was easy to implement: we just needed to register our app in Azure AD, add a few lines of code to the app, and configure the connection with Azure AD.

It is also important to bear in mind that .NET Framework versions older than 4.7 do not enable TLS 1.2 by default. TLS 1.2 is a widely used Internet protocol that ensures privacy and data security. We therefore needed to change this configuration in the app. Without this update, authentication via Azure AD would not work, as Azure AD no longer supports older TLS versions.

Managing app performance

We used Azure Application Insights for application performance management (APM) and for monitoring live web apps. Previously, the web apps had been using log4net (a library that helps programmers record what is currently happening in the system) and logging to a file. We wanted to send data about the app’s state directly to the Azure portal via Application Insights.

This change required us to update a few libraries used by log4net and add new references. We did not need, however, to change the app’s code, as we only needed to add appenders in the log4net configuration. In this way, app logs could be sent directly to Application Insights or to a text file in Azure Blob.

Automating the build and deploy process with Azure DevOps

We implemented the CI/CD processes using Azure DevOps. Previously, the client had been doing all deployments manually. We created processes allowing for building and deploying applications in the specific environments automatically.

Such an automated approach saves time and reduces the number of errors, and thus allows clients to save money. Given the architecture used in this project, Azure DevOps was the best choice, as it has many features that considerably speed up building and deploying an app. Additionally, it is well integrated with Microsoft Azure.

Applying the infrastructure-as-code approach

Next, we created an ARM template, i.e., a file used by MS Azure to create a specific set of services in the cloud. In this way, we can create new environments automatically from code (infrastructure as code). Such a template contains a detailed description of the services we want to use and the connections between them. Moreover, the template can be parameterized, which makes it reusable across different environments.
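As an illustration of what a parameterized template looks like, the Python sketch below builds a small ARM template and one parameter file per environment, then prints them as JSON. It is not the template from the project. The resource names (`plan-demo-…`, `app-demo-…`), the SKU values, the API version, and the helper names `build_template` and `build_parameters` are assumptions chosen for the example.

```python
import json

PLAN_ID = "[resourceId('Microsoft.Web/serverfarms', variables('planName'))]"


def build_template():
    """Return a minimal ARM template: an App Service plan plus a web app."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        # Parameters are the values that differ between environments.
        "parameters": {
            "environment": {"type": "string", "allowedValues": ["test", "prod"]},
            "planSku": {"type": "string", "defaultValue": "B1"},
        },
        # Placeholder naming scheme; real names must follow your own conventions.
        "variables": {
            "planName": "[concat('plan-demo-', parameters('environment'))]",
            "siteName": "[concat('app-demo-', parameters('environment'))]",
        },
        "resources": [
            {
                "type": "Microsoft.Web/serverfarms",
                "apiVersion": "2022-03-01",
                "name": "[variables('planName')]",
                "location": "[resourceGroup().location]",
                "sku": {"name": "[parameters('planSku')]"},
            },
            {
                "type": "Microsoft.Web/sites",
                "apiVersion": "2022-03-01",
                "name": "[variables('siteName')]",
                "location": "[resourceGroup().location]",
                # The web app can only be created once its plan exists.
                "dependsOn": [PLAN_ID],
                "properties": {"serverFarmId": PLAN_ID},
            },
        ],
    }


def build_parameters(environment, plan_sku):
    """Return the parameter file content for one environment."""
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentParameters.json#",
        "contentVersion": "1.0.0.0",
        "parameters": {
            "environment": {"value": environment},
            "planSku": {"value": plan_sku},
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_template(), indent=2))
    # Same template, different parameter values per environment (SKUs are examples).
    for env, sku in [("test", "B1"), ("prod", "P1v2")]:
        print(json.dumps(build_parameters(env, sku), indent=2))
```

The template stays the same for every environment; only the small parameter file changes. In practice, ARM templates are usually written directly as JSON files and kept in source control next to the app code.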

When services depend upon one another, Azure Resource Manager orchestrates resource creation so that resources are created in the right sequence. The infrastructure-as-code approach also makes it easier to validate infrastructure correctness and security, and to apply policies, e.g., to manage resources.
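The ordering idea can be sketched with a topological sort. The example below is only a conceptual illustration in Python, not how Azure Resource Manager is implemented. The resource names and the `DEPENDS_ON` map are hypothetical, loosely modeled on the services mentioned in this article.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependency map: each resource lists the resources it depends on.
DEPENDS_ON = {
    "appServicePlan": set(),
    "postgresServer": set(),
    "applicationInsights": set(),
    "webApp": {"appServicePlan", "postgresServer", "applicationInsights"},
}


def creation_order(depends_on):
    """Return the resources in an order where every dependency comes first."""
    return list(TopologicalSorter(depends_on).static_order())


if __name__ == "__main__":
    for resource in creation_order(DEPENDS_ON):
        print("create", resource)
```

The three independent resources may appear in any order, but the web app always comes last because it depends on all of them. A circular dependency would make the sort fail, which mirrors why dependencies between resources need to be declared without cycles.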

Azure cloud migration of a web app—summary

Based on the client’s requirements, we prepared the solution’s architecture, which included not only app services and PostgreSQL but also described how to organize a cloud environment taking into account CI/CD processes and how to manage the development of cloud-based apps.

To sum up:

- It is relatively easy to migrate .NET web apps from on-prem to the cloud, but the migration process requires experience and a carefully prepared cloud migration strategy to mitigate potential risks.

- It is important to analyze the client’s infrastructure in order to be able to propose the correct cloud services and optimize costs.

- Wherever possible, use the mechanisms available on the Azure platform, e.g., Application Insights, as they allow you to extend the capabilities of your app at minimal cost.