About the service

Your data becomes an asset only when it is trusted, governed, and usable at scale. Fabrity deploys modern data platforms that integrate data across systems, enforce quality and security, and make information reliably available for analytics, machine learning, and GenAI use cases. We focus on production readiness (automation, monitoring, and operational handover) so that your teams deliver measurable outcomes rather than one-off experiments.

What we do

We deploy AI-enabled data platforms that turn enterprise data into a reliable foundation for machine learning, covering the full life cycle from ingestion to production operations.

AI-enabled data platform

Data ingestion and integration

Collect and integrate data from multiple sources across an organization.

Central data lake/data platform

A single, governed hub for storing and managing analytical and ML-ready data.

Data processing and standardization

Clean, transform, and standardize data to ensure consistency and quality.

ML training environment

Enable data scientists to explore data, train models, and manage experiments.

Production model serving

Deploy models reliably as APIs or batch jobs and scale them with demand.

Data and model monitoring

Monitor data quality and model performance, and detect drift in production.

Deployable on:

on-premises

What you get

For CIOs and CTOs

An enterprise-grade data and AI architecture designed for scalability, security, and long-term operability. The platform enforces governance, access control, and monitoring across all layers, providing full visibility into data flows, workloads, and machine learning assets. Managed infrastructure and standardized components reduce operational risk and total cost of ownership.

For CDOs and data and AI teams

A production-ready data platform with a centralized lakehouse, scalable processing, and integrated MLOps. Standardized ingestion, transformation, and feature pipelines accelerate experimentation and model development. Automated deployment, versioning, and monitoring enable a reliable transition from research to production.

For business and product teams

Operationalized machine learning embedded into analytics and applications. Models are deployed as scalable services, continuously monitored, and retrained based on data and performance signals. This keeps the focus on measurable business outcomes rather than isolated proofs of concept.

What challenges we solve

Your organization generates large volumes of data, but the data is not operationalized.

Despite significant investment in data collection, datasets are not structured, standardized, or ready to support analytics and AI workloads.

The data landscape has grown organically and lacks architectural coherence.

Data is distributed across multiple systems, formats, and teams, making integration complex and slowing down access to reliable, trusted data.

Data pipelines exist, but they are fragile or heavily manual.

Ingestion and transformation processes are difficult to scale, monitor, and automate, increasing operational risk and limiting the ability to deliver data consistently.

Machine learning initiatives remain isolated and experimental.

There is limited capability to move models from notebooks into production environments with proper deployment, monitoring, and governance.

As a result, AI adoption is blocked at the platform level.

Data quality issues, missing standards, and insufficient data and ML infrastructure prevent the organization from scaling AI beyond proofs of concept.

Our approach: from data foundations to production AI

Data foundations

We design and implement data platforms that turn raw, distributed data into a reliable analytical foundation. Data is ingested, standardized, and processed using automated ETL and ELT pipelines that follow proven lakehouse patterns such as the medallion architecture (Bronze, Silver, and Gold layers). This supports data quality, scalability, and consistent access across the organization.
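To illustrate the idea behind Bronze, Silver, and Gold layers, here is a minimal sketch in plain Python, assuming a small set of hypothetical order records. Bronze keeps raw records as ingested, Silver holds deduplicated, typed, and standardized rows, and Gold holds a business-level aggregate. In a real platform each layer would typically be a table processed by a distributed engine rather than an in-memory list; the record fields and the `to_silver`/`to_gold` function names are illustrative assumptions, not part of any specific product.

```python
from collections import defaultdict
from datetime import date

# Bronze: raw records exactly as ingested (hypothetical sample data).
BRONZE = [
    {"order_id": "1", "country": " pl ", "amount": "120.50", "order_date": "2026-01-05"},
    {"order_id": "1", "country": " pl ", "amount": "120.50", "order_date": "2026-01-05"},  # duplicate delivery
    {"order_id": "2", "country": "DE", "amount": "80", "order_date": "2026-01-06"},
    {"order_id": "3", "country": "de", "amount": "n/a", "order_date": "2026-01-06"},  # unparseable amount
]


def to_silver(bronze_rows):
    """Silver: deduplicated, typed, standardized rows; invalid rows are skipped."""
    seen = set()
    silver = []
    for row in bronze_rows:
        if row["order_id"] in seen:
            continue  # drop duplicate deliveries of the same order
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # in practice, route to a quarantine table instead of skipping silently
        seen.add(row["order_id"])
        silver.append({
            "order_id": int(row["order_id"]),
            "country": row["country"].strip().upper(),
            "amount": amount,
            "order_date": date.fromisoformat(row["order_date"]),
        })
    return silver


def to_gold(silver_rows):
    """Gold: business-level aggregate, here total revenue per country."""
    revenue = defaultdict(float)
    for row in silver_rows:
        revenue[row["country"]] += row["amount"]
    return dict(sorted(revenue.items()))


silver = to_silver(BRONZE)
gold = to_gold(silver)
print(gold)  # {'DE': 80.0, 'PL': 120.5}
```

Keeping each layer as a separate, reproducible step means a fix to the Silver cleaning rules can be replayed from Bronze without re-ingesting the source systems.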

Machine learning environment

Building on the data foundation, we create environments for data exploration and machine learning development. Data teams can efficiently perform feature analysis, train models, and run controlled experiments. Built-in experiment tracking and versioning enable reproducibility and collaboration across teams.

Production model operations

We operationalize machine learning models and integrate them into business processes. Models are deployed as APIs or batch jobs running on scalable and resilient runtime environments. Continuous monitoring, quality checks, and automated retraining loops ensure models remain accurate and reliable over time.
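One common way to implement the drift check that feeds a retraining loop is the Population Stability Index (PSI), which compares how a feature is distributed in production against its training-time reference. The sketch below is a minimal, dependency-free illustration under stated assumptions, not a description of any particular monitoring product: the bin edges, sample data, and the 0.2 threshold (a widely used rule of thumb) are all hypothetical.

```python
import bisect
import math


def psi(reference, production, edges, eps=1e-6):
    """Population Stability Index (PSI) between a reference sample and a production sample.

    `edges` are bin boundaries; values below the first edge or above the last one
    are clamped into the first or last bin. `eps` avoids log(0) for empty bins.
    """
    n_bins = len(edges) - 1

    def proportions(values):
        counts = [0] * n_bins
        for v in values:
            i = bisect.bisect_right(edges, v) - 1
            counts[min(max(i, 0), n_bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref = proportions(reference)
    prod = proportions(production)
    return sum((p - r) * math.log(p / r) for p, r in zip(prod, ref))


def needs_retraining(reference, production, edges, threshold=0.2):
    """Flag a feature for retraining when PSI exceeds the threshold.

    0.2 is an assumed rule-of-thumb value; real thresholds are tuned per model.
    """
    return psi(reference, production, edges) > threshold


# Hypothetical feature values: training-time reference vs. two production windows.
EDGES = [0, 10, 20, 30]
reference = [5] * 50 + [15] * 30 + [25] * 20   # 50% / 30% / 20% across the bins
stable = [5] * 5 + [15] * 3 + [25] * 2         # same shape, smaller window
drifted = [5] * 10 + [15] * 30 + [25] * 60     # mass shifted to the last bin

print(needs_retraining(reference, stable, EDGES))   # False
print(needs_retraining(reference, drifted, EDGES))  # True
```

In a production setup, a scheduled job would typically compute a metric like this per feature and, when it crosses the threshold, trigger a retraining pipeline instead of printing a flag.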

Governance and security

Across all layers, we implement enterprise-grade governance and security. Access control, permissions, and metadata management provide transparency into data and model usage. Full auditability and traceability support compliance requirements and give stakeholders confidence in the platform.

Microsoft Azure—data and machine learning platform

Microsoft Azure enables organizations to build modern, scalable data platforms fully integrated with the Microsoft ecosystem. It provides a reliable foundation for collecting, storing, and processing data from multiple sources while meeting enterprise requirements for security and governance.

Using Azure’s native data services, we design lakehouse architectures that support both batch and real-time analytics. Distributed processing and cloud-native storage allow data teams to work efficiently with structured and semi-structured data, delivering trusted datasets for reporting, analytics, and downstream applications.

Azure also offers strong machine learning capabilities, allowing models to move smoothly from experimentation to production. Managed ML services, integrated MLOps, and scalable deployment options make it easier to operationalize machine learning and embed data-driven intelligence into business processes.

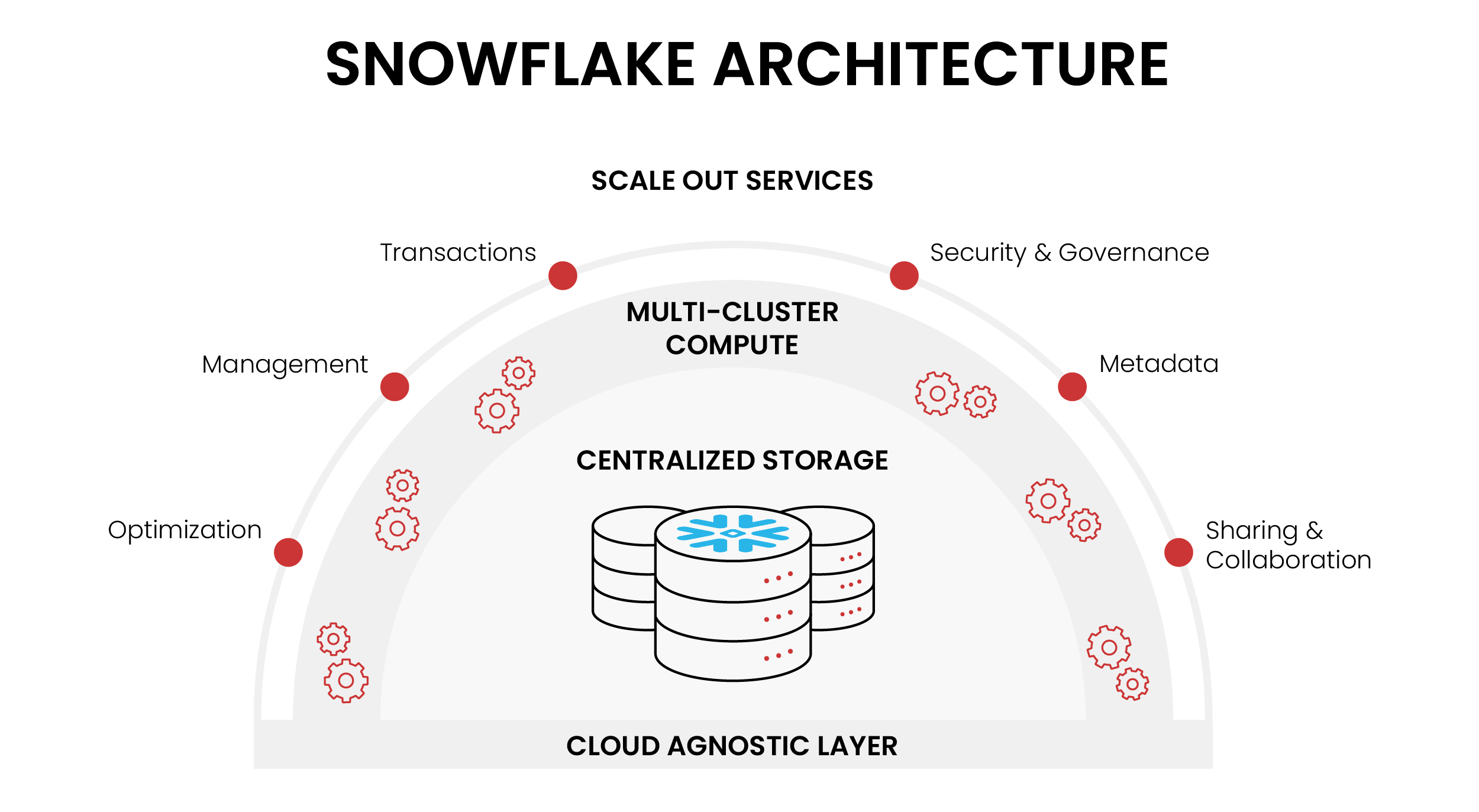

Snowflake—a modern cloud data platform

Snowflake is a cloud-based data platform that enables organizations to store, process, and analyze massive volumes of data efficiently. Built natively for the cloud, Snowflake scales compute and storage to match your needs, and you pay only for what you use.

A key innovation is the separation of compute and storage, which allows processing power and data volume to scale independently. Because it supports both structured and semi-structured data such as JSON, Snowflake fits a wide range of use cases.

Its SQL engine parallelizes query execution for faster processing, even on complex datasets, while caching helps deliver near-real-time analytics. Compute resources can be isolated and scaled as needed, keeping performance consistent across teams.

Snowflake also simplifies secure data sharing within organizations and with external partners. Integrating with numerous analytics tools, it provides an end-to-end solution for data warehousing, data lakes, and analytics in a fully managed, secure cloud environment.

Why work with us

End-to-end delivery from discovery through production operations

Governance-first design (security, auditability, lineage)

Cloud and on-prem experience (Azure, Snowflake, hybrid)

Production-grade engineering (CI/CD, testing, observability, reliability)

Partner-level expertise in the Microsoft and Snowflake ecosystems

Integration with your existing landscape without disruption (ERP/CRM and legacy systems, APIs, and enterprise IAM)

How we work

00.

Discovery and business analysis (recommended)

Align on business goals, assess available data, and define whether and how AI/ML should be applied before starting implementation.

01.

PoC (proof of concept)

02.

MVP (minimum viable product)

03.

Deployment

04.

Scaling and governance

Other technologies in our stack

Cloud data platforms:

Databricks

Data engineering and processing:

Azure Data Factory

Storage and formats:

Delta Lake

Azure Data Lake Storage

Machine learning and MLOps:

Azure Machine Learning

Deployment and serving:

Kubernetes

Business intelligence:

Power BI

Need support with your data?

Get in touch to see how we can help.

Book a free 15-minute discovery call