Large language models (LLMs), despite their ability to generate human-like content and understand natural language, have significant drawbacks that hinder their wider adoption in business scenarios. First, LLMs tend to hallucinate answers, presenting false statements as facts. Second, they raise serious privacy concerns, as companies are reluctant to share their data with LLMs, fearing it could be used to train publicly available models. Third, access to current data is crucial in business scenarios, but LLMs cannot provide it on their own because their knowledge is fixed at training time.

However, LLM-powered virtual assistants can yield considerable benefits in terms of productivity, and ways to address these challenges have been found. The first is a retrieval augmented generation (RAG) mechanism that enables users to receive answers grounded in verified knowledge, helping to curb LLM hallucinations. This capability makes RAG ideally suited for knowledge management in enterprises struggling to harness their internal documentation, which is often scattered across different systems and difficult to search.

For privacy and security concerns, the solution is straightforward: use a trusted cloud services provider. A great option is Microsoft Azure, which not only enables the use of state-of-the-art LLMs from OpenAI but also ensures enterprise-grade security and guarantees that your company data will not enter the public domain.

When access to current data is paramount, such as for a sales team that needs customer data from a CRM, one option is the function calling feature. This refers to the ability of LLMs to request the execution of specific functions or tasks that extend beyond generating text: the model emits a structured call, and the application runs it and passes the result back. This capability allows these models to interact with external systems, software, or databases. In practice, it can power a chatbot that accesses real-time financial data via an API to provide users with stock price updates or market trends, or a weather bot that checks the forecast in a specific location.
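The function calling flow can be sketched in a few lines. This is a minimal illustration, not the implementation used in the projects described here: the `get_stock_price` tool, the `TOOLS` registry, the JSON shape of the call, and the sample prices are all assumptions made for the example, and the model's output is simulated as a plain string.

```python
import json

# Hypothetical tool: a real system would call a CRM or market-data API here.
def get_stock_price(ticker: str) -> dict:
    prices = {"MSFT": 415.10, "AAPL": 189.30}  # made-up sample values
    return {"ticker": ticker, "price": prices.get(ticker)}

# Registry mapping tool names (as advertised to the model) to Python callables.
TOOLS = {"get_stock_price": get_stock_price}

def dispatch_tool_call(tool_call_json: str) -> str:
    """Run the function named in a model-produced call and return JSON."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**call["arguments"])
    return json.dumps(result)

# Simulated model output: the model only emits a structured request,
# and the application executes it on the model's behalf.
model_output = '{"name": "get_stock_price", "arguments": {"ticker": "MSFT"}}'
reply = dispatch_tool_call(model_output)
print(reply)
```

In a full loop, `reply` would be sent back to the model so that it can phrase the final answer for the user.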

This demonstrates that LLMs alone are not sufficient to harness the transformative power of generative artificial intelligence in business. It is the context in which they operate—external systems, applications, and databases—that makes the difference. In this article, I will describe three real-life examples of AI assistants built by Fabrity that combine conversational AI technology with other systems to deliver considerable business benefits.

AI virtual assistant in the heavy machinery industry

Knowledge management is a classic use case for large language models combined with a retrieval augmented generation (RAG) pipeline. As virtually every enterprise generates an enormous number of documents, such a solution can be applied across various industries, including manufacturing, financial services, and automotive. For one of our clients, a global manufacturer of heavy machinery, we developed a similar system to help them manage their extensive documentation.

They faced the challenge of handling an extensive array of documents covering all their products: operator manuals, technical specifications, safety manuals, maintenance and repair manuals, product data sheets, training materials, and warranty documentation. These documents, created by various teams, such as the production, maintenance, marketing, and legal departments, were scattered across different systems. Additionally, many documents were available in multiple versions, which complicated the task of finding relevant, accurate, and up-to-date information.

This limited access to organizational knowledge negatively impacted employee productivity and satisfaction. Maintenance technicians had to consult various materials before performing any repairs, and the sales team struggled to find the latest technical specifications to provide to customers. In short, all internal processes were more complex due to this issue.

For new hires, this situation meant a steep learning curve. In their first months, they needed to absorb vast amounts of information about the company and its products, services, and internal procedures. Such a dispersed knowledge base across different departments and systems prolonged the onboarding process and made it less effective. As a result, the company had to invest more in training new employees before they became fully operational.

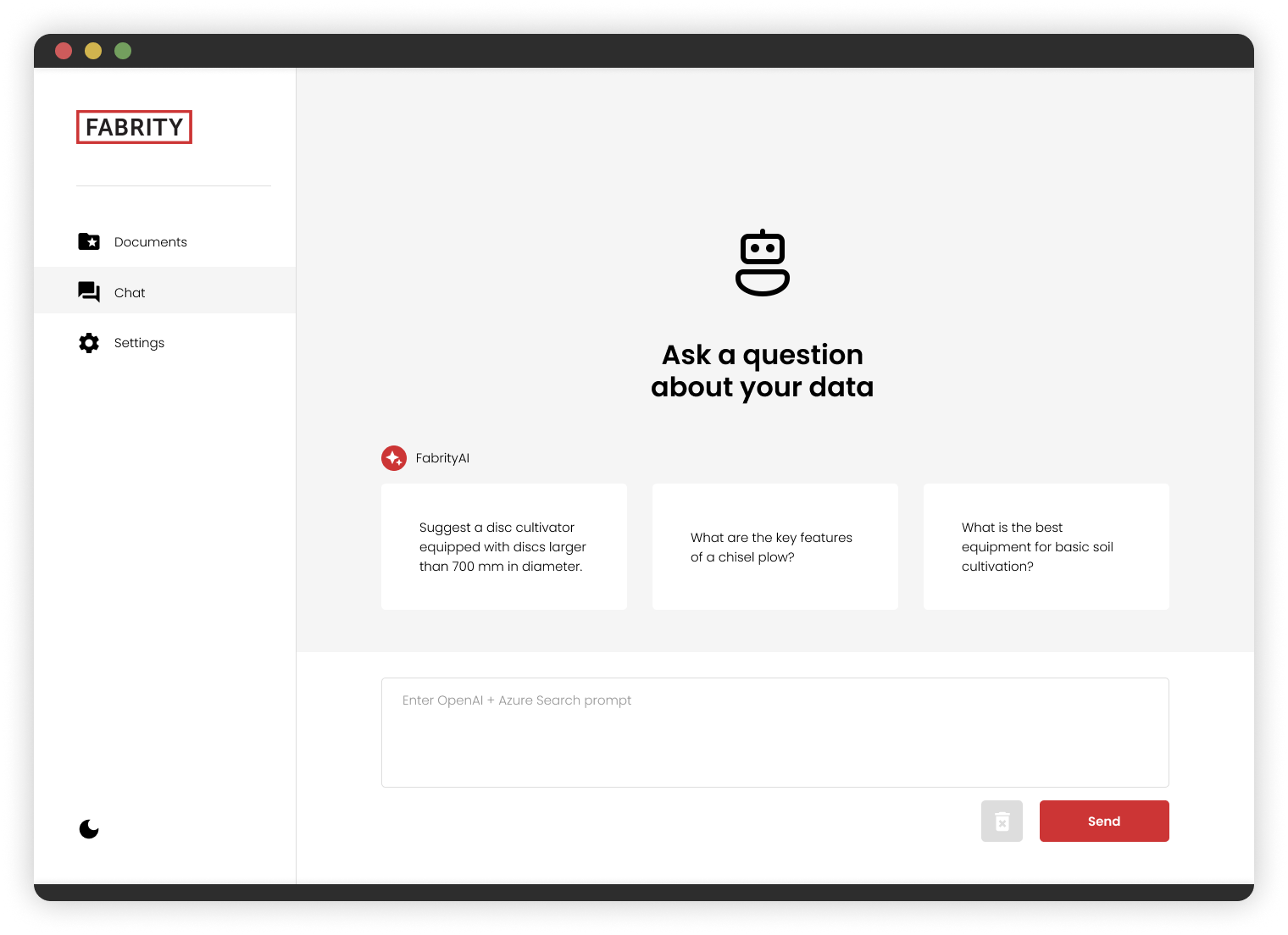

To address these challenges, we built a knowledge management solution utilizing a retrieval augmented generation (RAG) mechanism. This approach enhances the natural language processing capabilities of generative language models by combining them with a retrieval component. Essentially, we created an AI-powered solution that allows you to upload large sets of documents and ask natural language questions about their content using a chatbot interface. This enables you to search through large, dispersed sets of data to find precise answers to your queries, even very complex ones. See Figure 1 below:

Fig. 1 AI-powered personal assistant for knowledge management

What is crucial here is that RAG ensures that employees consistently receive the correct answers grounded in verified sources. If a question is beyond the scope of the data used to build the RAG pipeline, unlike ChatGPT, our solution does not attempt to guess the answer but informs the user that the answer cannot be found in the provided documents. ChatGPT and other generative AI solutions (like Google’s Bard) are stochastic models, meaning they generate the most probable answer based on the training data, which does not necessarily mean it is the correct one. In contrast, our solution knows the boundaries of its knowledge based on the company’s documentation and will not attempt to provide an answer not found in the documents.
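The "answer only from the documents" behavior can be illustrated with a toy retriever. This is a sketch under stated assumptions: production RAG pipelines typically use embeddings and a vector index, whereas this example swaps in simple word overlap, and the sample documents, the `min_overlap` threshold, and all function names are hypothetical.

```python
import re

# Made-up sample knowledge base, for illustration only.
DOCUMENTS = {
    "operator_manual": "Check the hydraulic oil level before starting the excavator.",
    "warranty": "The warranty covers manufacturing defects for 24 months.",
}

NOT_FOUND = "The answer cannot be found in the provided documents."

def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, min_overlap: float = 0.3):
    """Return (doc_id, passage) for the best match, or None if it is too weak."""
    q = tokenize(question)
    best_id, best_score = None, 0.0
    for doc_id, text in DOCUMENTS.items():
        # Share of question words that also appear in the document.
        score = len(q & tokenize(text)) / len(q) if q else 0.0
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_id is None or best_score < min_overlap:
        return None
    return best_id, DOCUMENTS[best_id]

def answer(question: str) -> str:
    """Answer from a retrieved passage, or refuse when nothing relevant exists."""
    hit = retrieve(question)
    if hit is None:
        return NOT_FOUND
    doc_id, passage = hit
    # A real pipeline would pass the passage to the LLM as context here.
    return f"{passage} (source: {doc_id})"

print(answer("How long does the warranty cover defects?"))
print(answer("What is the capital of France?"))
```

The key design choice is the explicit refusal path: when retrieval finds nothing above the threshold, the model is never asked to improvise.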

Additionally, data confidentiality is ensured, and our client does not need to worry that their internal company documents will become part of publicly available LLMs. This is achieved by separating the knowledge base, where all documents are stored, from an LLM that serves only to understand the user query and generate the answer. In other words, the LLM acts as an intermediary that securely accesses data stored on the Azure infrastructure, ensuring enterprise-grade security.

Last but not least, while the majority of the documents were in English, the LLM can handle conversations in any language. This means users can ask questions in their native language and receive accurate answers regardless of the language of the original documents.

Our client was pleased with the proof of concept (PoC) we built for them and asked us to develop it further by adding a voice recognition feature, allowing employees to ask questions verbally. Additionally, since the PoC had a limited scope and included only the product catalogs, we are now planning to include all company documentation to make our solution a company-wide personal assistant that helps employees find relevant information.

AI virtual assistant in pharma

Due to its stringent regulations, the pharmaceutical industry presents a significant challenge for custom software development companies. Not only must you develop a software solution that delivers tangible business benefits, but you also need to ensure its compliance with a comprehensive set of norms and regulatory requirements. Consequently, all technical innovations are approached with considerable caution in this sector. However, AI can also demonstrate its transformative potential in this regulation-intensive industry.

To illustrate this, consider our AI personal assistant that recently streamlined the sales of medicines for one of our pharmaceutical clients. They sought to improve the process of ordering medicines for B2B customers, including chemists, pharmaceutical distributors, and wholesalers. Traditionally, customers placed orders over the phone, and sales agents manually took and registered these orders in a separate system. This process was time-consuming, tedious for both customers and agents, and prone to errors.

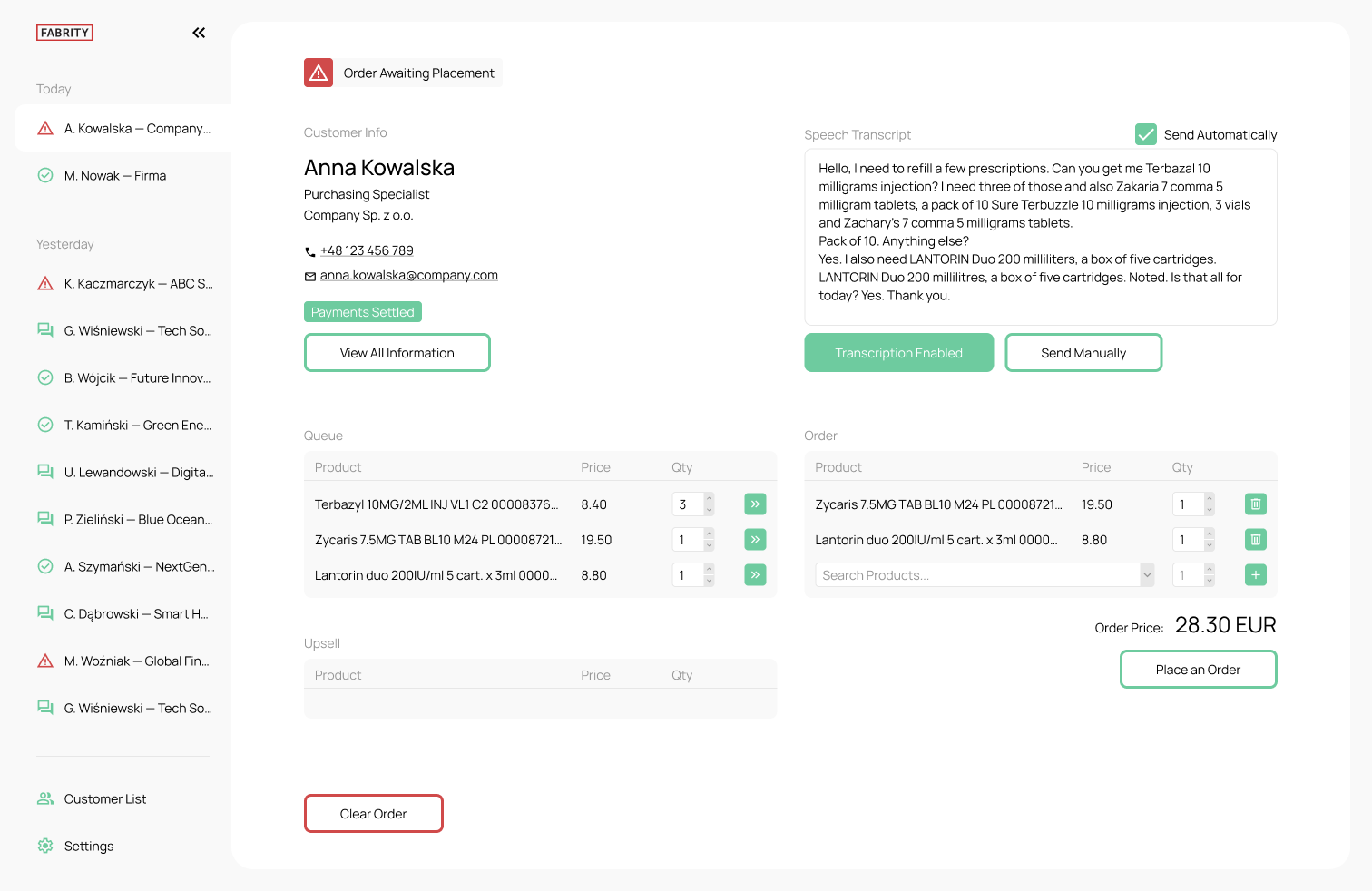

To tackle this challenge, we developed a virtual assistant that combines natural language processing algorithms for speech recognition with LLMs. The new, streamlined process works as follows: when a pharmacist calls to place an order, the virtual assistant identifies the caller by name and displays their customer record from the CRM database. It also alerts the agent to any overdue payments.

The entire phone conversation between the pharmacist and the sales agent is automatically transcribed. A custom dictionary containing all medicine names enables the speech-to-text mechanism to recognize the names of medicines (highlighted in capital letters) and log them along with their dosages and forms (such as pills, capsules, syrup, injections, etc.) in the queue window.

Moreover, even with a custom dictionary designed to recognize specific product names, the speech recognition mechanism may sometimes fail. This is where the LLM comes into play, detecting such issues and, based on the list of all medicines provided (e.g., a product catalog from the pharma company), suggesting the correct name of the medicine ordered by the pharmacist.
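The correction step can be approximated with a simple example. Note the substitution: in the solution described above an LLM proposes the corrected name, while this sketch uses fuzzy string matching from Python's standard `difflib` module as a stand-in. The catalog entries, the `cutoff` value, and the `suggest_name` helper are assumptions for illustration.

```python
import difflib

# Hypothetical product catalog; a real one would come from the pharma company.
CATALOG = ["AMOXICILLIN", "ATORVASTATIN", "IBUPROFEN", "PARACETAMOL"]

def suggest_name(transcribed: str, cutoff: float = 0.7):
    """Return the closest catalog name for a transcribed word, or None."""
    matches = difflib.get_close_matches(
        transcribed.upper(), CATALOG, n=1, cutoff=cutoff
    )
    return matches[0] if matches else None

# A misrecognized name is mapped back to the catalog entry.
print(suggest_name("ibuprofin"))
# A word that resembles no catalog entry yields no suggestion,
# so the agent falls back to the manual search in the order form.
print(suggest_name("xyzzy"))
```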

The sales agent then verifies the correctness of the recognized items in the queue and places them in the order form. If the virtual assistant fails to recognize a product name, the agent can search for the product in the database using the search option available in the order form.

Importantly, our virtual assistant also displays upsell opportunities in a separate window, allowing sales agents to offer additional products to customers. This not only creates a new revenue stream but also streamlines the work for agents by suggesting what to propose to the customer.

Once everything is verified, the sales agent can finalize the order. See Figure 2 below:

Fig. 2 AI virtual assistant for smarter sales in pharma

The project is currently at the pilot stage. We have constructed a PoC that is being tested by three selected agents from the client’s sales team. The initial results are promising, demonstrating a significant increase in productivity. Specifically, there is an increase in the number of calls that agents can handle during a workday.

AI assistant for data analysis in the manufacturing industry

One exemplary application of an AI personal assistant in data and industrial IoT involves a manufacturing client who required a solution for equipment wear measurement to facilitate predictive maintenance. To determine whether a machine is functioning correctly, vibration sensors are employed to measure tri-axial vibrations (in mm/s). If the vibration levels exceed the prescribed range, it indicates a potential fault, necessitating a machine inspection. Accordingly, the client requested a solution capable of accessing and analyzing data from the plant’s vibration sensors to identify and flag anomalies. Ideally, the solution should also list the possible root causes of detected anomalies and suggest what needs to be checked and potentially repaired.
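The basic anomaly check described above can be sketched as follows. The limit of 4.5 mm/s, the `VibrationReading` structure, and the sample readings are assumptions for illustration. The actual prescribed range depends on the machine and is not given here.

```python
from dataclasses import dataclass

@dataclass
class VibrationReading:
    machine_id: str
    x: float  # vibration velocity on each axis, in mm/s
    y: float
    z: float

VIBRATION_LIMIT_MM_S = 4.5  # assumed limit; the real value is machine-specific

def find_anomalies(readings, limit=VIBRATION_LIMIT_MM_S):
    """Return (machine_id, exceeded_axes) for every reading above the limit."""
    anomalies = []
    for r in readings:
        exceeded = [axis for axis in ("x", "y", "z") if abs(getattr(r, axis)) > limit]
        if exceeded:
            anomalies.append((r.machine_id, exceeded))
    return anomalies

# Made-up sample readings.
readings = [
    VibrationReading("pump-1", 1.2, 0.9, 1.1),
    VibrationReading("pump-2", 5.3, 2.0, 4.8),
]
flagged = find_anomalies(readings)
print(flagged)
```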

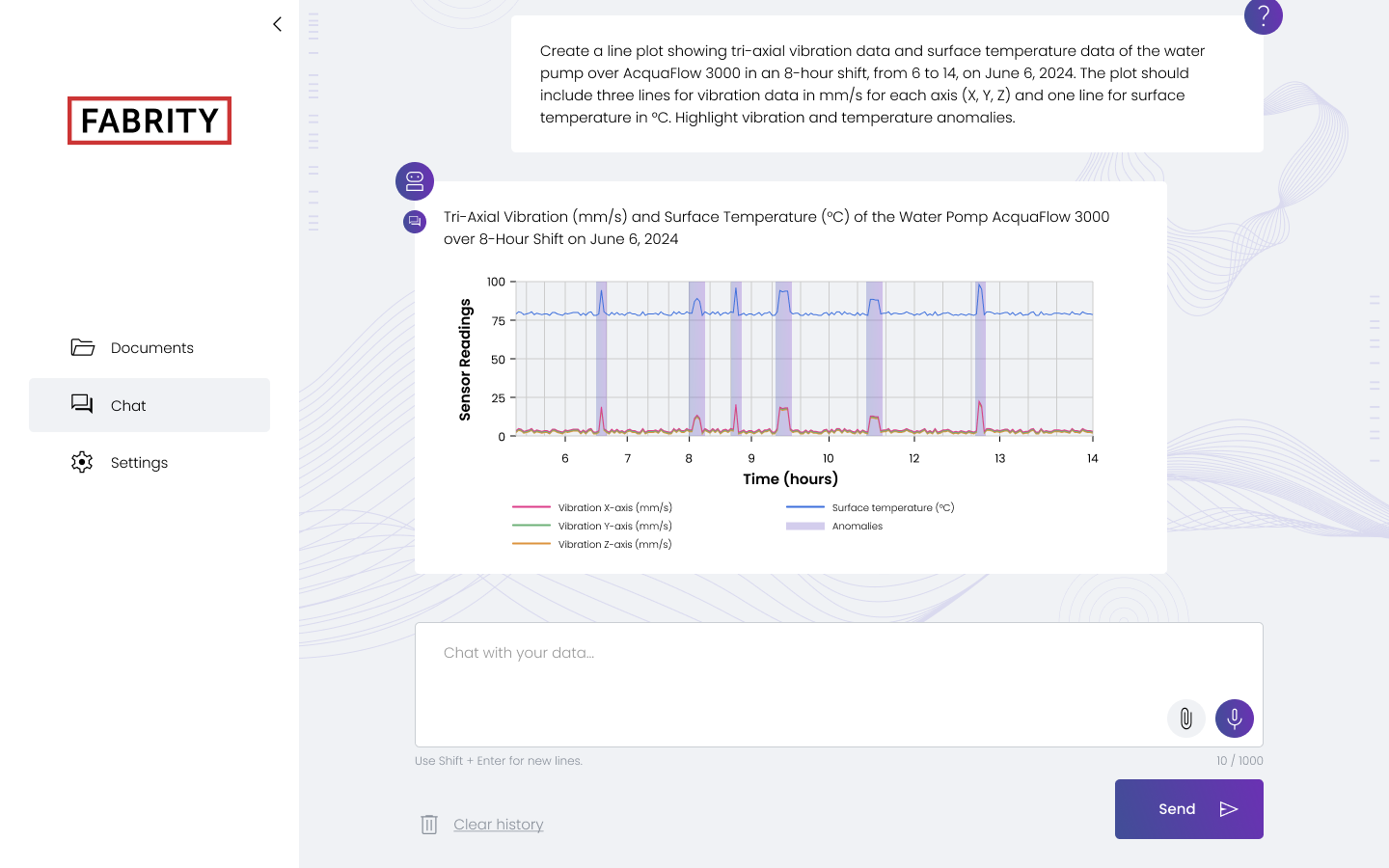

This was an ideal use case for generative AI, as large language models excel at data analysis. We developed a PoC that combines an LLM-powered chatbot with data from IoT vibration and temperature sensors, along with a RAG pipeline to access verified knowledge. To simulate real-world conditions, we used synthetic data generated by AI, which included both the operator’s manual for a fictional water pump, AcquaFlow 300, and sensor readings. In real-world scenarios, IoT sensor data would be sent to a cloud database via an API. For data confidentiality, we could leverage Azure’s enterprise-grade security infrastructure.

Here is how our solution works. Whenever a user, such as a plant manager, poses a question about the data, the LLM generates a Python script that retrieves this data from the database and visualizes or analyzes it as requested by the user. Although this process might seem straightforward, it was technically challenging to implement. We had to teach the LLM to recognize when a question pertains to data and determine what data should be used to respond. Additionally, we instructed the model on how to draw conclusions from this data, such as responding to queries about the anomalies for all machines in the factory or deciding which chart type to use. The result of such a query related to data from vibration and temperature sensors over a specified period is shown in Figure 3 below:

Fig. 3 AI personal assistant for data analysis and visualization

In our case, the data was related to tri-axial vibrations and temperature, but we can utilize any type of data coming from IIoT sensors. The only requirement is that it must be gathered and sent via API to the cloud.
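To make the script-generation step more concrete, here is a sketch of the kind of script a model might produce for a question such as "What was the peak temperature for each machine?". Everything in it is an assumption for illustration: the `readings` table, its columns, the sample values, and the in-memory SQLite database standing in for the cloud database. It is not output from the PoC.

```python
import sqlite3

def load_sample_data(conn: sqlite3.Connection) -> None:
    """Create a hypothetical readings table with made-up values."""
    conn.execute(
        "CREATE TABLE readings (machine_id TEXT, ts TEXT, temperature_c REAL)"
    )
    conn.executemany(
        "INSERT INTO readings VALUES (?, ?, ?)",
        [
            ("pump-1", "2024-01-01T08:00", 61.5),
            ("pump-1", "2024-01-01T09:00", 83.2),
            ("pump-2", "2024-01-01T08:00", 58.0),
        ],
    )

def peak_temperature_per_machine(conn: sqlite3.Connection) -> dict:
    """Return the highest recorded temperature for each machine."""
    rows = conn.execute(
        "SELECT machine_id, MAX(temperature_c) FROM readings "
        "GROUP BY machine_id ORDER BY machine_id"
    ).fetchall()
    return dict(rows)

conn = sqlite3.connect(":memory:")  # stand-in for the cloud database
load_sample_data(conn)
peaks = peak_temperature_per_machine(conn)
print(peaks)
```

A generated script like this would normally run in a sandbox, with its result handed to a charting step or summarized by the model.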

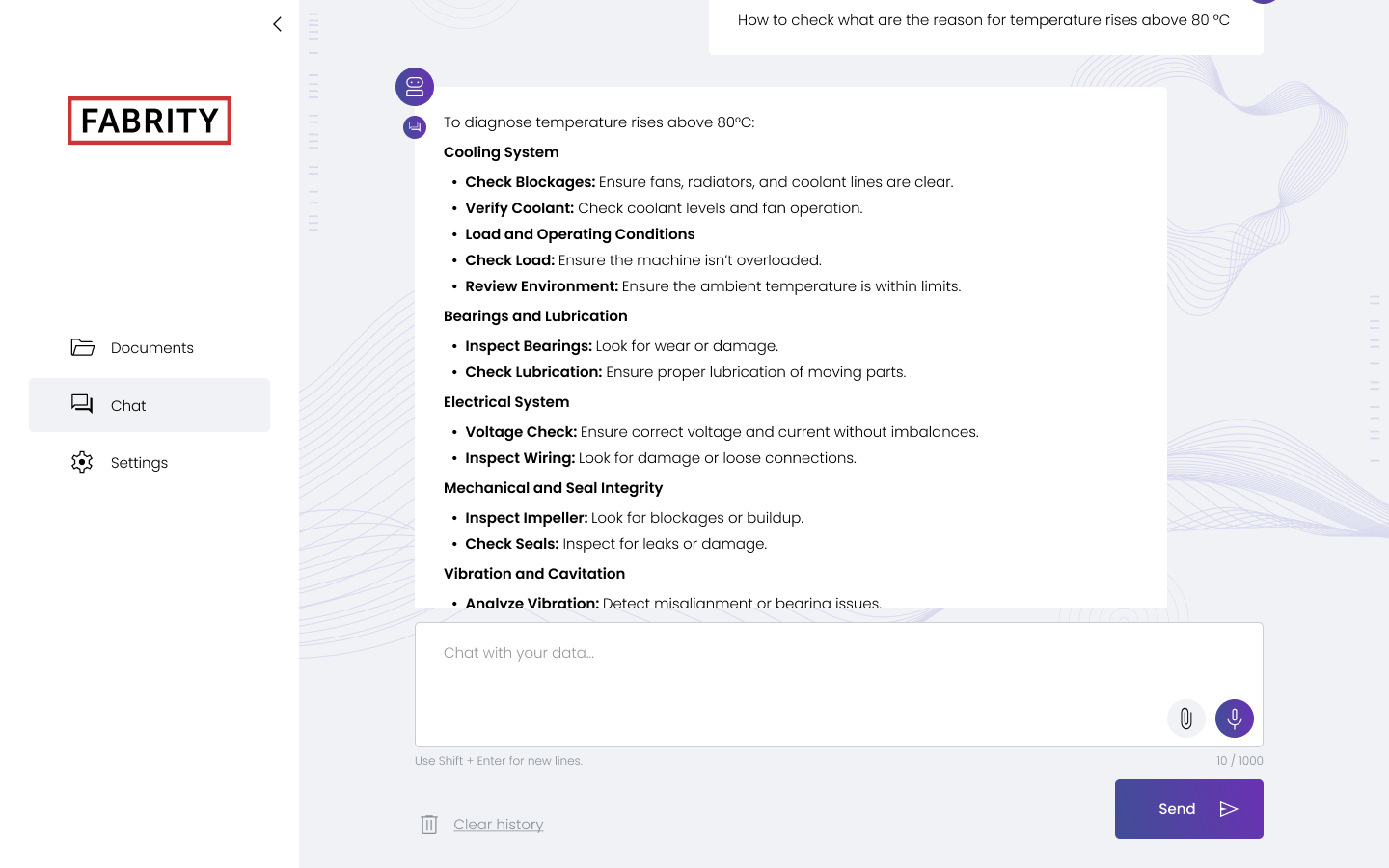

More importantly, our AI-powered solution can advise users on potential root causes of detected anomalies. For instance, when we asked the assistant about possible reasons for temperature rising above 80°C, it provided detailed answers with likely causes and actions for maintenance technicians to take. This is made possible by integrating the AI assistant with a RAG pipeline, which supplies the necessary knowledge to accurately respond to user queries. See Figure 4 below:

Fig. 4 AI assistant identifying root causes of anomalies and providing technician guidance

Our AI-powered assistant offers several benefits for both plant managers and technicians. First, it flags anomalies, enabling technicians to take corrective action before a machine breaks down. Second, the data collected from sensors can be analyzed to determine when maintenance is needed, making it an ideal solution for predictive maintenance. Finally, the AI assistant provides technicians with detailed guidance on how to address specific anomalies.

We conducted a demonstration of our PoC for the client, and their feedback was overwhelmingly positive. We plan to initiate a pilot project in which we will implement our assistant in the factory for a limited number of machines and data sets to see how it performs in a real-world environment. We are hopeful about the success of this project, as it holds tremendous potential and could change the way factories are managed, paving the way to Industry 4.0.

AI-powered virtual assistants in your company

All three of these examples of AI virtual assistants show that the Holy Grail of generative artificial intelligence in business lies in combining LLMs with external systems and databases to build one AI-powered ecosystem in the company. LLMs are becoming a commodity available to everyone and no longer constitute a competitive advantage on their own. What makes LLM-powered systems really powerful is the data under the hood, which allows them to give answers grounded in verified sources and to perform more complex tasks such as data visualization and analysis.

As you can see, at Fabrity we have a number of generative AI projects under our belt, each at a different stage of implementation, from proofs of concept and pilots to full-scale deployments. If you are considering building your own AI-powered virtual assistant, we are here to help. The process is as follows:

- We analyze your business requirements.

- You gather the necessary data (technical documentation, knowledge bases, IIoT sensor data, etc.).

- We prepare the Azure infrastructure.

- We build a dedicated PoC for you.

- We test and optimize it for performance.

Once the data or documentation is ready, we can build your custom AI assistant in 2–3 weeks, if there are no specific requirements. Drop us a line at sales@fabrity.pl, and we will contact you to discuss all the details.

If you need assistance building your industrial IoT network from the ground up, we can create a complete solution that covers all layers of IoT infrastructure—from sensors and gateways to edge devices, cloud platforms, and custom software for industrial and manufacturing data analysis.